Online Safety Act: Everything you need to know

No matter your age, your life likely unfolds online. And as younger individuals spend more time in digital spaces, governments around the world are establishing regulations to protect them.

In some countries, like the US, numerous state laws are emerging. In other countries, like the United Kingdom (UK), national regulation has become the answer. Case in point: the UK’s Online Safety Act (OSA), which was written with the goal of protecting children and teens from harmful content online.

Below, we take a closer look at what the OSA is and the types of businesses it regulates. We also discuss the law’s key requirements and steps that businesses can take to become and remain compliant.

What is the Online Safety Act?

The Online Safety Act (OSA) is a UK law designed to “keep the internet safe for children” and give adults more control over their online experiences.

The law consists of more than 200 sections outlining various types of illegal or harmful content, expectations for regulated companies, the consequences of noncompliance, and more. The Office of Communications (Ofcom) is responsible for enforcing the law, providing clarity on its provisions, and overseeing the rollout.

The OSA may affect social media companies, search engines, adult content sites, and other companies with online apps or platforms. And it can impact any regulated service — including those based outside the UK — that has a significant number of UK users, targets the UK, or can be accessed by UK users (if there’s a “material risk of significant harm” to those users).

Learn more: Protecting minors online: An overview of right-to-use regulations worldwide

Which businesses does the Online Safety Act affect?

The Online Safety Act can apply to companies offering services that are available online and either target UK users or have a significant UK user base. It categorizes services into three groups:

User-to-user (U2U) services

Search services

Pornography services

Regulated U2U and search services are also called Part 3 services, and regulated pornographic content providers are also known as Part 5 services.

Use the Ofcom Online Safety Regulation Checker to see if the OSA applies to your organization. Below, we’ll take a closer look at what these groups can include.

User-to-user services (Part 3 service)

A service or platform may qualify as a U2U service if it allows users to generate, upload, or share content that other users can access.

Examples of potential U2U services include:

Social media companies

Online dating services

Forums and message boards

Video- and image-sharing services

Gaming platforms and providers

Messaging apps

Search services (Part 3 service)

Search services include search engines that offer “a service or functionality which enables a person to search some websites or databases.” They can also include “a service or functionality which enables a person to search (in principle) all websites or databases.”

However, search engines that “enable a person to search just one website or database” are not subject to the law.

A company that offers both U2U and search services is defined as a “combined service.” It must comply with the requirements for both U2U and search services.

Services that provide pornographic content (Part 5 service)

The OSA also affects services that publish or display pornography, which the law describes as “content of such a nature that it is reasonable to assume that it was produced solely or principally for the purpose of sexual arousal.”

Part 5 services are limited to sites that publish their own content. Some websites may offer U2U or search services related to pornography, like platforms that host user-generated content. As a result, they must comply with specific deadlines and requirements for Part 3 services as well.

Learn more: Building your age verification strategy: how to navigate global regulations

Requirements under the Online Safety Act

The obligations that a company must fulfill depend on the types of services it offers. The Online Safety Act requirements that apply to most regulated companies include:

Complete risk assessments for illegal content and quickly remove violations from their platform. This includes content related to terrorism, hate speech, animal cruelty, fraud, selling illegal drugs or weapons, self-harm, child sexual abuse, and revenge pornography.

Complete and record a children’s access assessment to determine if children (under 18 years old) can access a service, which is assumed if the service doesn’t have a highly effective age-assurance system (we share the definition of highly effective below). Services that children can access must also assess whether they have, or are likely to attract, a significant number of users who are children.

If children are likely to access the service, the company must:

Complete children’s risk assessments.

Implement age-assurance measures and enforce age limits.

Prevent or minimize the chance that children will access content that is legal but considered harmful. This includes pornography and content that promotes, encourages, or offers instructions for self-harm, eating disorders, or suicide.

Give children age-appropriate access to “priority content,” including content related to bullying, dangerous stunts or challenges, and serious violence.

Clearly explain user protections within the terms of service.

Provide users with ways to submit complaints and report problems.

Balance freedom of expression and privacy rights with safety measures.

Category 1 services (defined below) also have to make it easier for adults to control their online experience. The law does this by requiring these service providers to:

Allow users to filter out potentially harmful content they don’t wish to see, such as content involving bullying, misogyny, antisemitism, homophobia, racism, violence, or self-harm.

Allow verified users to limit their interactions with unverified users.

Additionally, the law introduced new criminal offenses for individuals who engage in the following behaviors: cyberflashing, threatening communications, intimate image abuse, encouraging or assisting serious self-harm, epilepsy trolling, and sending false information intended to cause non-trivial harm.

Additional categories and requirements

The OSA further categorizes services based on the following conditions:

Category 1: Uses a content recommendation system, and either

Has more than 34 million UK users on the U2U portion of the service, or

Allows users to share user-generated content and has more than 7 million UK users on the U2U portion of the service.

Category 2A: Search services that have more than 7 million UK users. The category doesn’t include vertical search services, which are services that allow users to search for specific products or services, like ticket booking or insurance comparison websites.

Category 2B: Allows users to send direct messages and has more than 3 million UK users on the U2U portion of the service.
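The thresholds above can be sketched as a small check. This is a minimal illustration, not legal guidance: the `ServiceProfile` class, its field names, and the `osa_categories` function are hypothetical, and the numbers are taken directly from the list above. The sketch only reports which threshold conditions a service meets and does not resolve how overlapping categories are treated.

```python
from dataclasses import dataclass
from typing import List

# UK user thresholds as described in the list above.
CAT1_RECOMMENDER_USERS = 34_000_000
CAT1_SHARING_USERS = 7_000_000
CAT2A_SEARCH_USERS = 7_000_000
CAT2B_MESSAGING_USERS = 3_000_000


@dataclass
class ServiceProfile:
    """Hypothetical summary of a service; field names are illustrative."""
    u2u_uk_users: int = 0            # UK users on the U2U portion
    search_uk_users: int = 0         # UK users on the search portion
    has_recommender: bool = False    # uses a content recommendation system
    allows_content_sharing: bool = False
    allows_direct_messages: bool = False
    is_vertical_search: bool = False


def osa_categories(s: ServiceProfile) -> List[str]:
    """Return the category thresholds the service appears to meet."""
    cats = []
    if s.has_recommender and (
        s.u2u_uk_users > CAT1_RECOMMENDER_USERS
        or (s.allows_content_sharing and s.u2u_uk_users > CAT1_SHARING_USERS)
    ):
        cats.append("1")
    if s.search_uk_users > CAT2A_SEARCH_USERS and not s.is_vertical_search:
        cats.append("2A")
    if s.allows_direct_messages and s.u2u_uk_users > CAT2B_MESSAGING_USERS:
        cats.append("2B")
    return cats
```

For example, a hypothetical platform with 8 million UK users, a recommendation system, and content sharing would meet the Category 1 conditions, while an insurance comparison site with 10 million UK users would meet none because vertical search is excluded.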

Companies that fall into one of these categories have additional requirements under the law. For example, services in all three categories must comply with additional transparency reporting and disclosure requirements related to deceased children who were users. Category 1 and Category 2A services have further requirements on top of these.

Age checks and assurance under the Online Safety Act

The Online Safety Act requires every Part 3 business to conduct a children’s access assessment to determine what age checks they need to put in place. Organizations with U2U and search services must conduct separate assessments for each service.

Services that don’t have a highly effective age assurance system and access controls need to conduct a children’s access assessment. The next step depends on the results:

Part 3 services that likely won’t be accessed by children don’t need to check users’ ages. However, they must rerun a children’s access assessment within one year, or sooner if the service changes and children will likely access it.

Part 3 services that children will likely access must complete a children’s risk assessment and implement measures to reduce the risk of harm to children. For example, U2U services may need to implement highly effective age assurance if children may be exposed to harmful priority content.

Part 5 services and Part 3 services with pornographic content must have highly effective age assurance processes. They also can’t encourage users to circumvent an age assurance process or display pornographic content before or during an age check.
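The three outcomes above can be summarized as a simple decision function. This is a simplified sketch under stated assumptions: the `NextStep` enum and the `next_step` function are hypothetical names, the inputs are assumed to come from your own assessments, and the function returns only the headline obligation described above rather than every duty that may apply.

```python
from enum import Enum


class NextStep(Enum):
    HIGHLY_EFFECTIVE_AGE_ASSURANCE = "implement highly effective age assurance"
    CHILDRENS_RISK_ASSESSMENT = "complete a children's risk assessment and mitigate risks"
    REASSESS_WITHIN_ONE_YEAR = "no age checks required; rerun the access assessment within one year"


def next_step(part: int, has_pornographic_content: bool,
              children_likely_to_access: bool) -> NextStep:
    """Map the article's three scenarios to the headline next step."""
    if part not in (3, 5):
        raise ValueError("part must be 3 or 5")
    # Part 5 services and Part 3 services with pornographic content
    # need highly effective age assurance.
    if part == 5 or has_pornographic_content:
        return NextStep.HIGHLY_EFFECTIVE_AGE_ASSURANCE
    # Part 3 services that children will likely access.
    if children_likely_to_access:
        return NextStep.CHILDRENS_RISK_ASSESSMENT
    # Part 3 services that children are unlikely to access.
    return NextStep.REASSESS_WITHIN_ONE_YEAR
```

A Part 3 service that children will likely access may still end up needing highly effective age assurance after its risk assessment, so treat the result as a starting point rather than a final answer.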

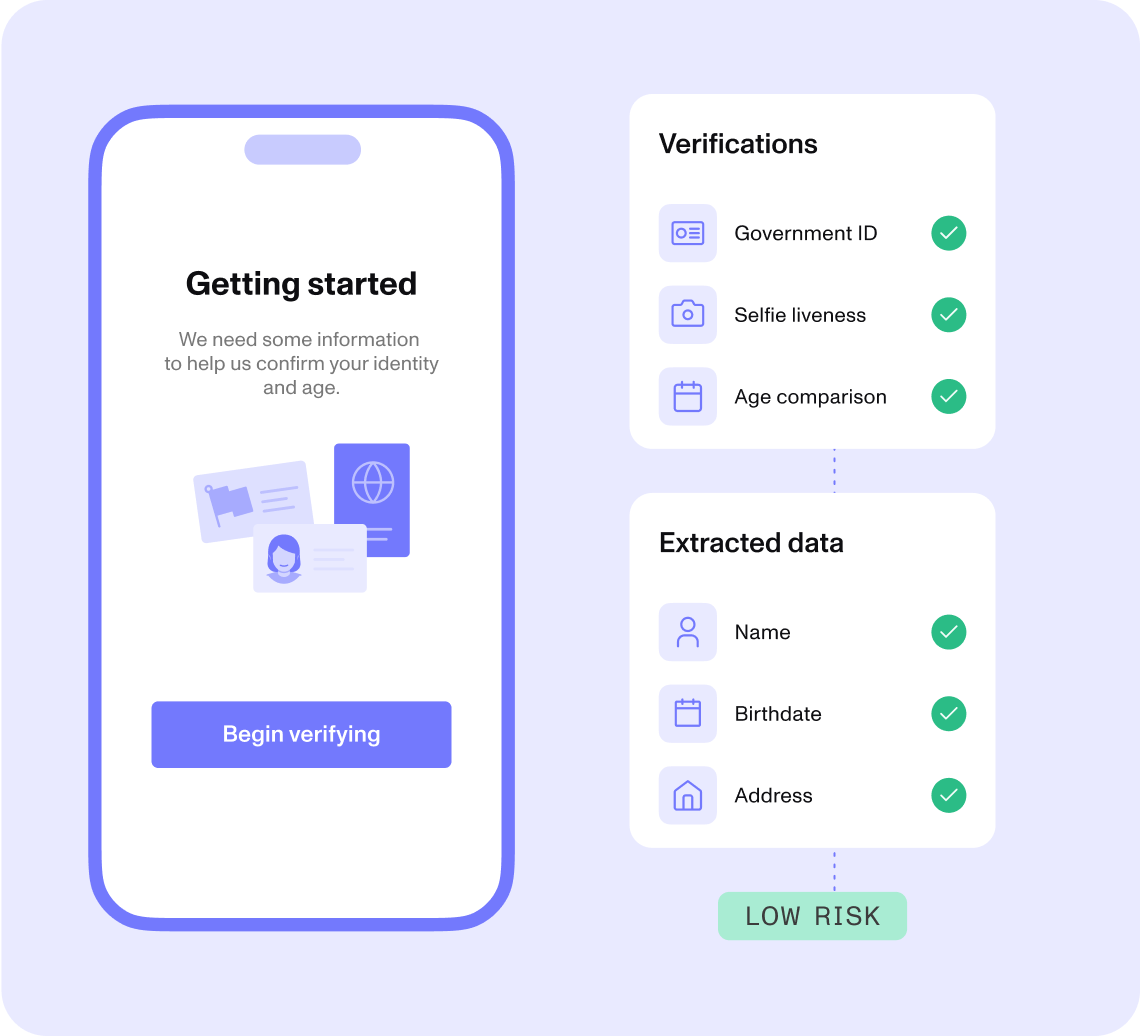

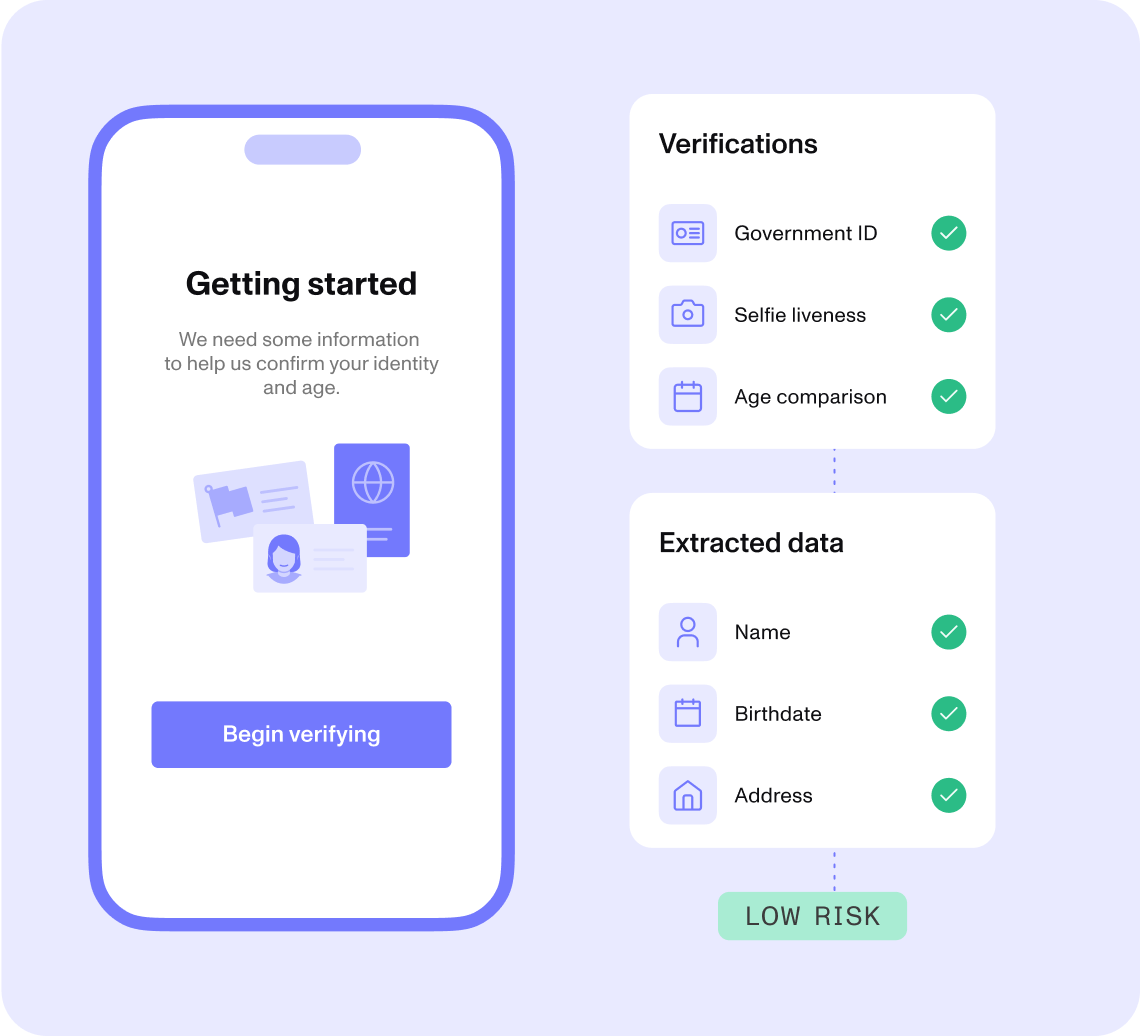

Age assurance methods can include age verification and/or age estimation. However, they must be highly effective.

What is highly effective age assurance?

Ofcom has shared different methods it believes are capable of being highly effective at confirming users’ ages:

Facial age estimation

Open banking data

Digital identity services, such as a digital identity wallet

Credit card age checks

Email-based age estimation

Mobile network operator age checks

Photo-ID matching

Additionally, organizations must ensure that the age assurance method and process they use is technically accurate, robust, reliable, and fair.

Ofcom has also pointed to some types of age assurance that aren't highly effective, including asking users to state their age, requiring payments that don’t depend on users being 18 or older (such as accepting debit cards), and adding an age requirement to the terms of service.

For more information and guidance, check the Ofcom site, which includes specific guides for Part 3 and Part 5 services.

Learn more: How to implement an age verification system for your business

Complying with the Online Safety Act

Although the Online Safety Act lays out many guidelines and goals, it doesn’t require organizations to use specific technologies or measures to assess age and implement age-appropriate access.

While some organizations welcome the flexibility, the ambiguity can also make it difficult to strike a balance between compliance and business goals. In general, we think organizations will be in a good position if they:

Review potential providers’ third-party certifications. Regulators and third-party organizations like the Age Check Certification Scheme review and certify solution providers and their verification methods. Check potential providers’ age assurance and data privacy and security certifications when comparing the options.

Align your checks and requirements with your risk tolerance. After completing the required risk assessments for OSA compliance, consider your organization’s priorities and risk appetite. Do you want to emphasize growth and access with less rigorous, but still compliant, age assurance checks? Or do you want to go beyond the minimum requirements and use more stringent checks?

Support multiple verification options for users. Find providers who offer multiple age verification methods that are capable of being highly effective and let users choose which one to use.

Create dynamic flows that adjust your required checks in real time. Balance conversion and assurance with flows that adapt based on users’ location, age, and risk signals. For example, you might start with a low-friction mobile operator age check and then step up to a government ID check or selfie age estimation based on the results.

Adhere to data minimization principles. Don’t collect or store more customer data than you need for an age assessment.

Let your provider be the expert. Find a solution provider that monitors and proactively creates systems to comply with existing and upcoming OSA requirements. Some providers can also safely store and delete the data collected during age checks.
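The dynamic-flow idea above (start with a low-friction check and step up when needed) can be sketched as follows. This is an illustration only: `run_step_up`, the stub checks, and the rule that an inconclusive result triggers the next check while a failed result ends the flow are all assumptions, not any provider's actual API or an Ofcom requirement.

```python
from typing import Callable, List, Optional, Tuple

# A check returns True (age confirmed), False (failed), or None (inconclusive).
Check = Callable[[str], Optional[bool]]


def run_step_up(user_id: str,
                checks: List[Tuple[str, Check]]) -> Tuple[str, List[str]]:
    """Run checks in order, stepping up only while results are inconclusive."""
    attempted: List[str] = []
    for name, check in checks:
        attempted.append(name)
        result = check(user_id)
        if result is True:
            return "verified", attempted
        if result is False:
            return "denied", attempted
        # result is None: inconclusive, so fall through to the next check.
    return "unresolved", attempted


# Hypothetical stubs standing in for real provider integrations.
def mobile_operator_check(user_id: str) -> Optional[bool]:
    return None  # pretend the operator could not confirm the user's age


def facial_age_estimation(user_id: str) -> Optional[bool]:
    return True  # pretend the estimate was comfortably over 18


flow = [
    ("mobile_operator", mobile_operator_check),
    ("facial_age_estimation", facial_age_estimation),
]
status, attempted = run_step_up("user-123", flow)
```

Ordering the list from lowest to highest friction means most users finish at the first step, and only inconclusive cases see the more demanding checks.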

Want to learn more about how Persona can help you comply with the OSA and remain flexible enough to quickly adapt to new secondary legislation? Get a custom demo today.

FAQs

How is the Online Safety Act being implemented?

Ofcom is responsible for implementing the OSA. It maintains a list of important dates for Online Safety compliance with links to official statements, guides, and other helpful resources.

Who is responsible for enforcing the Online Safety Act?

The Online Safety Act is enforced by the Office of Communications, or Ofcom, which is responsible for overseeing all regulated communication services, including television, radio, broadband, and home and mobile phone services. The law also grants the UK’s Secretary of State a number of powers to direct Ofcom in its enforcement duties.

What are the penalties for not complying with the Online Safety Act?

Services that fail to comply with the Online Safety Act may face fines of up to £18 million or 10% of their global annual revenue, whichever is greater. However, there are some exceptions for organizations with a small UK presence. Additionally, in some circumstances, Ofcom can ask a court to prevent a website or app from being available in the UK.

Executives and senior management may also be held criminally liable if they are notified by Ofcom about instances of child sexual exploitation and abuse and fail to remove the related content. Failure to answer information requests from Ofcom may also result in prosecution, with the possibility of jail time.
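To make the "whichever is greater" rule for fines concrete, here is a small arithmetic sketch. The function name `max_osa_fine_gbp` is hypothetical, revenue is assumed to be a whole number of pounds, and the sketch ignores the exceptions mentioned above.

```python
FIXED_CAP_GBP = 18_000_000   # the £18 million figure
REVENUE_SHARE_PERCENT = 10   # the 10% of global annual revenue figure


def max_osa_fine_gbp(global_annual_revenue_gbp: int) -> int:
    """Return the greater of £18 million or 10% of global annual revenue."""
    revenue_based = global_annual_revenue_gbp * REVENUE_SHARE_PERCENT // 100
    return max(FIXED_CAP_GBP, revenue_based)
```

Under this rule, the 10% figure only becomes the larger number once global annual revenue exceeds £180 million. Below that, the £18 million figure is the ceiling.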

What is the difference between the Online Safety Act and the Digital Services Act?

The Online Safety Act is a UK law primarily affecting search engines, various types of online platforms, and sites that publish pornographic content. It has many requirements related to shielding users from content that is illegal, age-restricted, potentially harmful, or unwanted by the user. Many businesses with services accessible by UK users are subject to the law, regardless of where the businesses are based.

The Digital Services Act is a somewhat comparable law in the European Union. Like the OSA, its requirements apply to search engines, social media platforms, and other services that allow for content sharing. But it also applies to online marketplaces. Many of its requirements are similar to those of the OSA. These include scanning for and removing illegal or harmful content, giving users a means of reporting such content, and more.